09 Memory Management

Address¶

| Address | |

|---|---|

| Logical (Virtual) | Address generated by CPU |

| Physical | Address seen by memory unit (one loaded into memory address register) |

Address Space¶

| Address Space | |

|---|---|

| Logical | Set of all logical address |

| Physical | Set of all physical address |

Types of Memory Allocation¶

| Type | 1 chunk of consecutive locations are required? |

|---|---|

| Contiguous | ✅ |

| Non-contiguous | ❌ |

flowchart TB

ma[Memory Allocation] -->

c & nc[Non-Contiguous]

c[Contiguous] -->

spa[Single Partion Allocation] & mpa[Multiple partition allocation]

mpa -->

fsp & dp[Dynamic Partioning]

fsp[Fixed Size Partioning] -->

es[Equal Sized] & us[Unequal Sized]Multiple Partition Allocation¶

When a partition is free, a process is selected from input queue and is loaded to the free partition

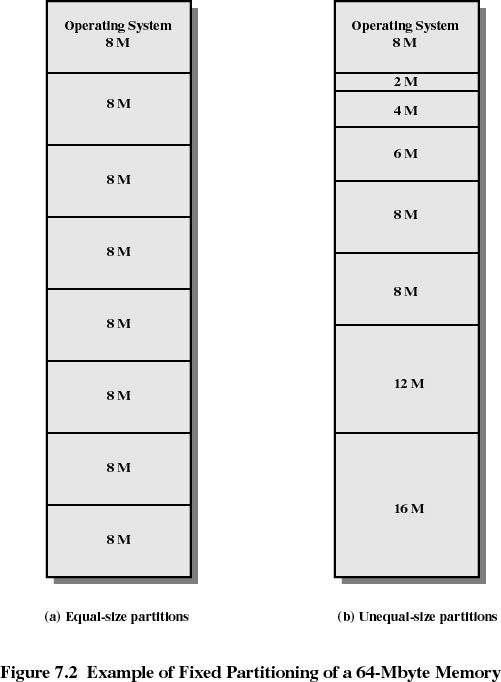

Fixed Size Partitions¶

Internal fragmentation = unused memory within a partition

Equal Fixed Size Partitions¶

❌ Program may be much smaller or larger than the size of the partitions

❌ Internal fragmentation = large

Unequal Fixed Size Partitions¶

❌ Program may be much smaller or larger than the size of the partitions

✅ Internal fragmentation is lower than equal partitioning

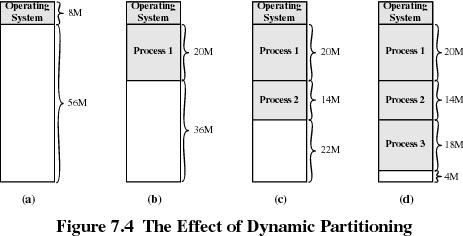

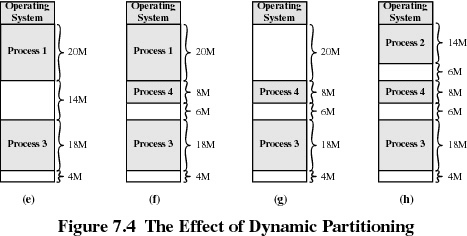

Dynamic Partitions¶

Partitions are created dynamically as process enter and leave main memory

Hole is the block of available memory

❌ Causes external fragmentation (multiple holes generated within the memory)

Allocation Algorithms¶

| Meaning | |

|---|---|

| First-fit | Allocate the first hole that is big enough to accomodate |

| Next-fit | Allocate the hole immediately after the previously alloted location (Subtype of first-fit) |

| Best-fit | Allocate the smallest hole that is big enough to accomodate |

| Worst-fit | Allocate the largest hole available |

Compaction/Defragmentation¶

Computationally-expensive, but allows us to reduce external fragmentation

Move

- allotted partitions beside each other

- holes beside each other

Paging¶

non-contiguous memory allocation technique

To run a program of size \(n\) pages, we need to find \(n\) free frames and load the pages of the program into the frames

used to map/translate logical to physical address

Every process has a page table

frame size = page size \(\in 2^n, n \in Z\)

Page Table¶

Indexed by page number

Stores the frame number/base address of each page in physical memory

No of entries = no of pages

| Page number (acts as index) | Frame no/base address |

|---|---|

| 0 | 0 |

| 1 | 4 |

| 2 | 2 |

| 3 | 7 |

Formulae¶

Address Translation¶

(Diagram from slides)

- Given logical address

- Get page no and page offset

| Page No | Page Offset |

|---|---|

| Bits to locate base address of each page in physical memory | Bits to combine with base address to define the physical address |

-

Get frame no (stored in the page we just accessed)

- \[ \text{Base address} = \text{Frame no} \times \text{Frame size} \]

-

Frame Offset = page offset

| Frame No | Frame Offset |

|---|---|

| Bits to locate base address of each frame in physical memory | Bits to combine with base address to define the physical address |

- \[ \text{Physical address} = \text{Base Address} + \text{Frame Offset} \]

I Missed Something¶

TLB¶

Small, fast-lookup hardware cache called associative memory or translation look-aside buffers (TLBs) are used for implementing Page Table.

64 or 128 locations/entries

Each entry has 2 parts

- key(tag)

- value

When presented with an item, it is compared with all keys simulatneously for a match

If a key is matched, corresponding value is returned

Costly but faster

TLB Hit, TLB Miss

Effective Memory Access Time¶

Memory Protection¶

We achieve this using memory protection bit in the page table (not TLB)

| Memory Protection Bit | |

|---|---|

| Read-only | 0 |

| Read-write | 1 |

Implementation of page table will now be

| Frame No | Memory Protection Bit | |

|---|---|---|

| 0 | 2 | 0 |

| 1 | 4 | 1 |

| 2 | 3 | 0 |

| 3 | 5 | 1 |

Whenever we have a write operation, the OS checks the memory protection bit in the page table

If we try to write into a read-only page of the process, we receive a trap (software interrupt) by the OS