Artificial Neural Networks¶

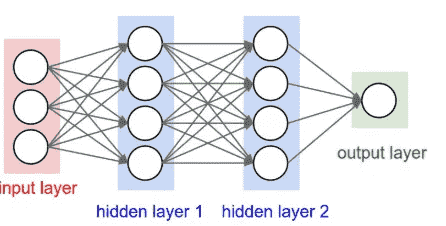

A neural network is made of layers of neurons, connected so that the input to each layer is the output (after activation) of the previous layer.

Neural networks are loosely based on how the human brain works.

You can think of a neural network as combining multiple non-linear decision surfaces into a single decision surface.

Hyperparameters¶

- Batch size

- Input size

- Output size

- Number of hidden layers

- Number of neurons in hidden layers

- Regularization

- Loss function

- Weight initialization technique

- Optimization

    - Algorithm

    - Learning rate

- Number of epochs
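As a rough illustration, the hyperparameters listed above could be collected into a single configuration object. This is only a sketch: the class name `Hyperparameters`, the field names, and every default value are arbitrary placeholders chosen for the example, not recommendations from these notes.

```python
from dataclasses import dataclass


@dataclass
class Hyperparameters:
    # All defaults below are illustrative placeholders, not tuned values
    batch_size: int = 32
    input_size: int = 2
    output_size: int = 1
    hidden_layers: int = 1
    hidden_neurons: int = 4
    regularization: str = "l2"
    loss_function: str = "cross_entropy"
    weight_init: str = "xavier"
    optimizer: str = "sgd"
    learning_rate: float = 0.01
    epochs: int = 10


# Override only the values that differ from the defaults
config = Hyperparameters(learning_rate=0.1)
```

Keeping these values in one place makes it easier to record which settings produced which result.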

Neuron¶

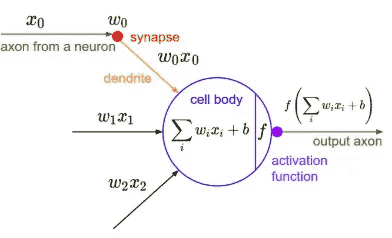

Most basic unit of a neural network

Tasks¶

- Receive input from other neurons and combine them together

- Perform some kind of transformation to give an output. This transformation is usually a mathematical combination of inputs and application of an activation function.
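The two tasks above can be sketched in a few lines of plain Python. The function names, the bias term, and the choice of a sigmoid activation are assumptions made for this example; the notes do not prescribe a particular activation here.

```python
import math


def sigmoid(z):
    # One common activation function (assumed here for illustration)
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias, activation):
    # Task 1: combine the inputs (weighted sum plus a bias term)
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    # Task 2: transform the combined value with the activation function
    return activation(z)


# 0.5 * 1.0 + (-0.25) * 2.0 + 0.0 = 0.0, and sigmoid(0.0) = 0.5
out = neuron([1.0, 2.0], [0.5, -0.25], 0.0, sigmoid)
```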

Visual representation¶

MP Neuron¶

McCulloch Pitts Neuron

Highly simplified computational model of a neuron

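\(g\) aggregates the inputs, and the function \(f\) takes a decision based on this aggregate, giving \(y \in \{ 0, 1 \}\)

\[ y = f(g(x)) = \begin{cases} 1 & \text{if } \sum x_i \ge \theta \\ 0 & \text{otherwise} \end{cases} \]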

- \(\sum x_i\) is the summation of boolean inputs

- \(\theta\) is threshold for the neuron
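A minimal sketch of an MP neuron, assuming the usual convention that the neuron fires when the sum of its boolean inputs reaches the threshold \(\theta\). The function name `mp_neuron` is made up for this example.

```python
def mp_neuron(inputs, theta):
    # g: aggregate the boolean inputs by summing them
    g = sum(inputs)
    # f: fire (1) if the aggregate reaches the threshold, otherwise 0
    return 1 if g >= theta else 0


pairs = [(0, 0), (0, 1), (1, 0), (1, 1)]

# AND of two inputs needs both to be on: theta = 2
and_table = [mp_neuron([a, b], theta=2) for a, b in pairs]
# OR of two inputs needs at least one to be on: theta = 1
or_table = [mp_neuron([a, b], theta=1) for a, b in pairs]
```

Only the threshold changes between AND and OR; the aggregation step is the same.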

❌ Limitation¶

An MP neuron can only represent linearly separable functions

Perceptron¶

MP neuron with a mechanism to learn numerical weights for inputs

✅ Input is no longer limited to boolean values

- \(w_i\) is the weight for input \(x_i\)
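A sketch of how a perceptron differs from the MP neuron: each input is multiplied by its weight \(w_i\) before aggregation, so real-valued inputs are allowed. The threshold convention (\(\ge \theta\)) and the example numbers are assumptions made for illustration.

```python
def perceptron(inputs, weights, theta):
    # Pre-activation: weighted sum of (possibly real-valued) inputs
    z = sum(w * x for w, x in zip(weights, inputs))
    # Activation: step decision against the threshold
    return 1 if z >= theta else 0


weights = [1.0, -2.0]  # hypothetical weights: the second input counts against firing
fires = perceptron([0.9, 0.2], weights, theta=0.3)   # z is about 0.5
silent = perceptron([0.2, 0.9], weights, theta=0.3)  # z is about -1.6
```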

Key Terms for Logic¶

- Pre-Activation (Aggregation)

- Activation (Decision)

Perceptron Learning Algorithm¶
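The following is a sketch of the standard textbook perceptron update rule, not necessarily the exact formulation these notes had in mind. It assumes a bias is folded in as `weights[0]` (playing the role of \(-\theta\)), zero initial weights, and a learning rate of 1. The helper names are hypothetical.

```python
def predict(weights, x):
    # x carries a leading 1.0 so that weights[0] acts as the bias
    z = sum(w * xi for w, xi in zip(weights, x))
    return 1 if z >= 0 else 0


def train_perceptron(data, n_features, max_epochs=100):
    weights = [0.0] * (n_features + 1)
    for _ in range(max_epochs):
        errors = 0
        for features, target in data:
            x = [1.0] + list(features)
            error = target - predict(weights, x)
            if error != 0:
                # Move the weights towards (or away from) the misclassified input
                weights = [w + error * xi for w, xi in zip(weights, x)]
                errors += 1
        if errors == 0:
            # Every example is classified correctly: converged
            break
    return weights


# OR is linearly separable, so the loop terminates with zero errors
or_data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights = train_perceptron(or_data, n_features=2)
predictions = [predict(weights, [1.0, a, b]) for (a, b), _ in or_data]
```

For data that is not linearly separable (XOR, for example), the loop would simply stop at `max_epochs` without reaching zero errors.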

Perceptron vs Sigmoidal Neuron¶

| | Perceptron | Sigmoid/Logistic |
|---|---|---|
| Type of line | Step Graph | Gradual Curve |
| Smooth Curve? | ❌ | ✅ |
| Continuous Curve? | ❌ | ✅ |
| Differentiable Curve? | ❌ | ✅ |
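The difference in the table can be seen numerically. In this sketch the step function jumps from 0 to 1 at the threshold, while the sigmoid changes gradually and has a well-defined derivative everywhere. The function names are chosen for this example.

```python
import math


def step(z):
    # Perceptron activation: abrupt jump at z = 0
    return 1.0 if z >= 0 else 0.0


def sigmoid(z):
    # Sigmoid/logistic activation: smooth transition from 0 to 1
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    # Derivative of the sigmoid, defined for every z
    s = sigmoid(z)
    return s * (1.0 - s)


zs = [-0.1, 0.0, 0.1]
step_outputs = [step(z) for z in zs]        # jumps from 0.0 straight to 1.0
sigmoid_outputs = [sigmoid(z) for z in zs]  # changes only slightly around 0.5
```

The existence of `sigmoid_grad` is what makes gradient-based training possible; the step function has no useful derivative at the jump.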

MLP¶

Multi-Layer Perceptron

Simple neural network with 3 Layers

```mermaid
flowchart LR
x1 & x2 -->
h1 & h2 & h3 & h4 -->
y

subgraph il[Input<br />Layer]
    x1 & x2
end

subgraph hl[Hidden<br />Layer]
    h1 & h2 & h3 & h4
end

subgraph ol[Output<br />Layer]
    y
end
```

For an input layer with \(n\) nodes, we will have

- 1 output

- \(2^n\) nodes in hidden layer
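One way to read the \(2^n\) figure, assuming it refers to representing an arbitrary boolean function of \(n\) inputs: give each of the \(2^n\) input combinations its own hidden perceptron, and let the single output unit OR together the hidden units for which the function is true. The sketch below builds such a network for XOR; the construction and all names are illustrative assumptions, and \(2^n\) is a sufficient count rather than a necessary one.

```python
from itertools import product


def build_network(truth_fn, n):
    # One hidden unit per input combination: 2**n units in total
    patterns = list(product((0, 1), repeat=n))
    # Output weight is 1 for the patterns where the target function is true
    output_weights = [1 if truth_fn(*p) else 0 for p in patterns]

    def network(x):
        hidden = []
        for p in patterns:
            # Weight +1 where the pattern has a 1, -1 where it has a 0;
            # z reaches sum(p) only when x matches the pattern exactly
            z = sum((1 if pi else -1) * xi for pi, xi in zip(p, x))
            hidden.append(1 if z >= sum(p) else 0)
        # Output unit: OR over the selected hidden units
        z_out = sum(w * h for w, h in zip(output_weights, hidden))
        return 1 if z_out >= 1 else 0

    return network, len(patterns)


xor_net, hidden_size = build_network(lambda a, b: a != b, n=2)
xor_table = [xor_net(x) for x in product((0, 1), repeat=2)]
```

XOR is not linearly separable, so a single perceptron cannot represent it, but this network with \(2^2 = 4\) hidden units can.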

Feed-Forward NN¶

An NN (with \(> 3\) layers) in which every layer feeds forward into the next layer; backward connections and self-loops are not allowed

For an input layer with \(n\) nodes, we will have

- \[ hidden layers = \]

-

\(W_i\) denotes the weights into layer \(i\)
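A minimal sketch of a forward pass, assuming each layer \(i\) has a weight matrix \(W_i\) (one row per neuron), a bias vector \(b_i\), and a sigmoid activation. The biases, the activation choice, and the example weights are assumptions for illustration only.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def feed_forward(x, layers):
    # layers is a list of (W_i, b_i) pairs, ordered from first to last layer
    a = x
    for W, b in layers:
        # Each row of W_i holds the incoming weights of one neuron in layer i
        a = [sigmoid(sum(w * ai for w, ai in zip(row, a)) + bi)
             for row, bi in zip(W, b)]
    return a


# Hypothetical 2-3-1 network: 2 inputs, 3 hidden neurons, 1 output
layers = [
    ([[0.1, -0.2], [0.4, 0.3], [-0.5, 0.2]], [0.0, 0.1, -0.1]),
    ([[0.3, -0.6, 0.9]], [0.05]),
]
output = feed_forward([1.0, 0.5], layers)
```

Information only flows from one layer to the next inside the loop; nothing is fed back, which is what makes the network feed-forward.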

Decision Boundary¶

| Hidden Layers | Shape of Region |
|---|---|
| 0 | Open |
| 1 | Closed/Open |
| \(\ge 2\) | Closed |

As you increase the number of hidden layers, the likelihood of an open decision boundary decreases (which is desirable).