07 Advanced RNN

Non-Linear Units

Replace the nonlinearity \(\phi\) in the hidden-state update \(h_t = \phi(W_{xh} x_t + W_{hh} h_{t-1} + b_h)\) with an MLP

✅ Keeps the structure of the latent space

❌ Costly (due to more complex gradients)

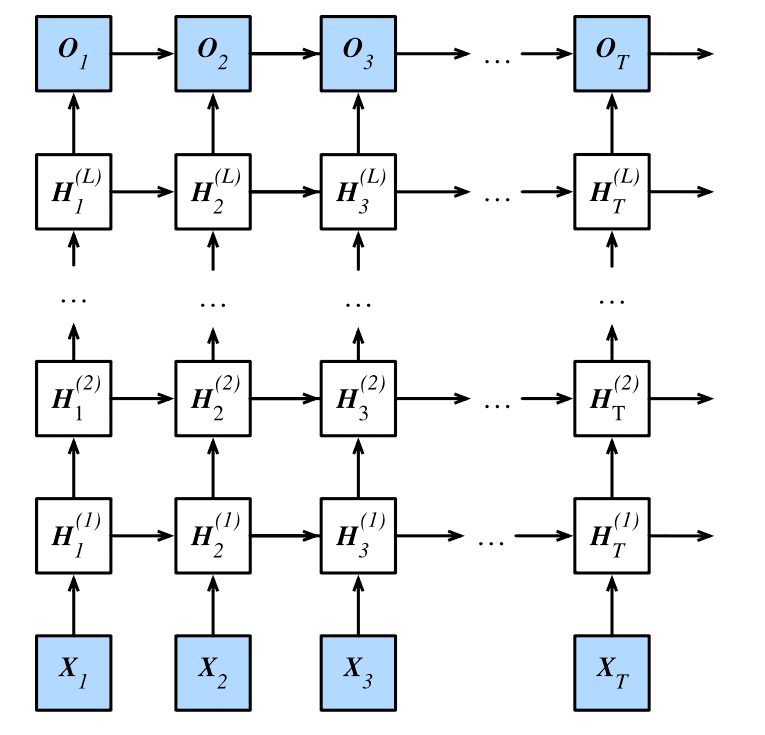

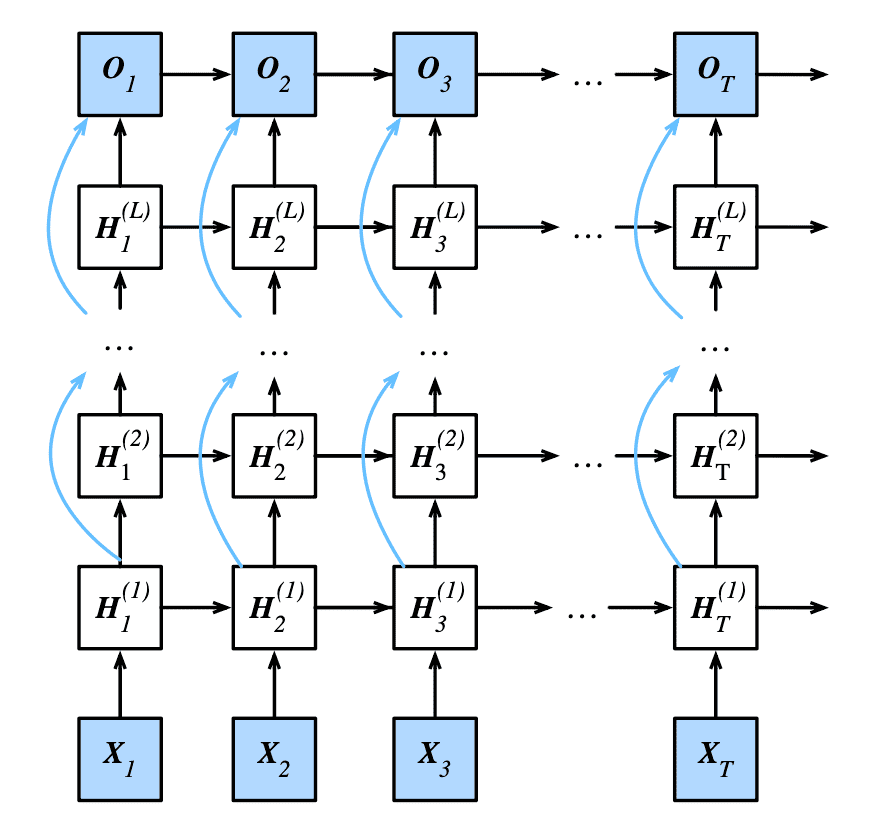
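A minimal NumPy sketch of such a cell, assuming a two-layer MLP (ReLU hidden layer, tanh output) applied to the concatenation \([x_t, h_{t-1}]\). The layer sizes, activations, initialization scale and the names `mlp_cell_params`, `mlp_cell_step` and `run` are illustrative assumptions, not taken from the notes.

```python
import numpy as np

rng = np.random.default_rng(0)

def mlp_cell_params(n_in, n_hidden, n_mlp):
    # Hypothetical helper: weights of a 2-layer MLP acting on [x_t, h_{t-1}]
    return {
        "W1": rng.normal(0, 0.1, (n_in + n_hidden, n_mlp)),
        "b1": np.zeros(n_mlp),
        "W2": rng.normal(0, 0.1, (n_mlp, n_hidden)),
        "b2": np.zeros(n_hidden),
    }

def mlp_cell_step(p, x, h):
    z = np.concatenate([x, h])                   # input and previous state together
    a = np.maximum(0.0, z @ p["W1"] + p["b1"])   # ReLU hidden layer of the MLP
    return np.tanh(a @ p["W2"] + p["b2"])        # tanh output keeps the state in (-1, 1)

def run(p, xs):
    # Unroll the cell over a sequence xs of shape (T, n_in)
    h = np.zeros(p["b2"].shape[0])
    states = []
    for x in xs:
        h = mlp_cell_step(p, x, h)
        states.append(h)
    return np.stack(states)

p = mlp_cell_params(n_in=3, n_hidden=4, n_mlp=8)
H = run(p, rng.normal(size=(5, 3)))
print(H.shape)  # (5, 4)
```

The extra hidden layer is where the added cost comes from: every backward pass through time now differentiates through two affine maps and two nonlinearities per step instead of one of each.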

Deep RNN

Rather than using a single hidden layer, we stack several hidden layers, i.e., at each time step the RNN has multiple cells, and the hidden state of one layer is the input to the layer above it.

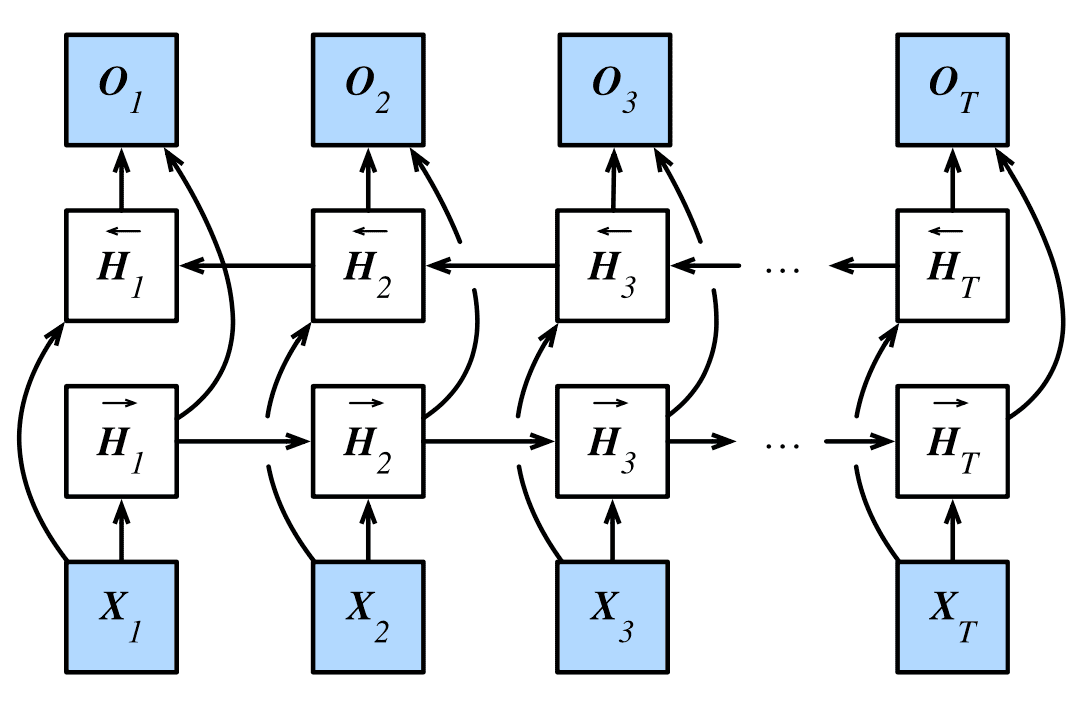

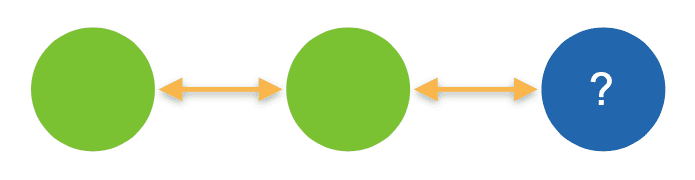
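A sketch of the stacking in NumPy, assuming plain tanh cells in every layer; `rnn_cell_params` and `deep_rnn` are hypothetical names chosen for illustration. At each time step the input is passed up through the layers, and each layer also carries its own recurrent state.

```python
import numpy as np

def rnn_cell_params(rng, n_in, n_hidden):
    # Plain tanh cell: h_t = tanh(x_t W_xh + h_{t-1} W_hh + b)
    return (rng.normal(0, 0.1, (n_in, n_hidden)),
            rng.normal(0, 0.1, (n_hidden, n_hidden)),
            np.zeros(n_hidden))

def deep_rnn(xs, layers):
    """xs: (T, n_in); layers: list of (W_xh, W_hh, b). Returns top-layer states (T, n_hidden)."""
    hs = [np.zeros(b.shape[0]) for (_, _, b) in layers]  # one recurrent state per layer
    outputs = []
    for x in xs:
        inp = x
        for l, (W_xh, W_hh, b) in enumerate(layers):
            hs[l] = np.tanh(inp @ W_xh + hs[l] @ W_hh + b)
            inp = hs[l]  # layer l's state is the input of layer l + 1
        outputs.append(inp)
    return np.stack(outputs)

rng = np.random.default_rng(0)
layers = [rnn_cell_params(rng, 3, 8)] + [rnn_cell_params(rng, 8, 8) for _ in range(2)]
out = deep_rnn(rng.normal(size=(6, 3)), layers)
print(out.shape)  # (6, 8)
```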

Bidirectional RNN

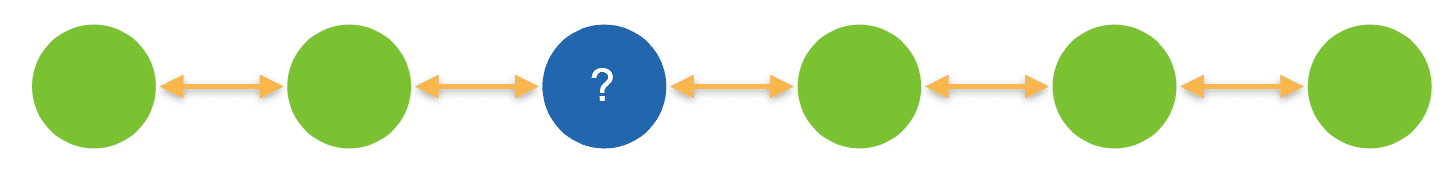

Context

- I am **happy**

- I am **very** hungry

- I am **so** hungry, I could eat 2 plates of rice

Very different words fit the blank depending on both the past and the future context of the word.

Standard RNNs only look at the past. For interpolation (filling in a blank), we can also use the future.

Implementation

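- One RNN reads the sequence forward (left to right)

- One RNN reads the sequence backward (right to left)

- Combine both hidden states at each time step (typically by concatenation) to generate the output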
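A minimal NumPy sketch of these steps, assuming tanh cells and concatenation as the combination rule; the helper names `rnn`, `birnn` and `params` are hypothetical. The backward RNN runs on the reversed sequence and its states are reversed again, so that row \(t\) of the output holds both the forward and the backward state for position \(t\).

```python
import numpy as np

def rnn(xs, W_xh, W_hh, b):
    """Plain tanh RNN over xs of shape (T, n_in); returns states of shape (T, n_hidden)."""
    h = np.zeros(b.shape[0])
    hs = []
    for x in xs:
        h = np.tanh(x @ W_xh + h @ W_hh + b)
        hs.append(h)
    return np.stack(hs)

def birnn(xs, fwd, bwd):
    h_fwd = rnn(xs, *fwd)                           # reads x_1 ... x_T
    h_bwd = rnn(xs[::-1], *bwd)[::-1]               # reads x_T ... x_1, re-aligned to time order
    return np.concatenate([h_fwd, h_bwd], axis=1)   # (T, 2 * n_hidden)

rng = np.random.default_rng(0)

def params(n_in, n_h):
    return (rng.normal(0, 0.1, (n_in, n_h)), rng.normal(0, 0.1, (n_h, n_h)), np.zeros(n_h))

fwd, bwd = params(3, 4), params(3, 4)
xs = rng.normal(size=(5, 3))
H = birnn(xs, fwd, bwd)
print(H.shape)  # (5, 8)
```

Note that the backward half of `H[0]` already depends on every later input, which is exactly why the model needs the whole sequence up front.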

Limitations

A Bi-RNN does not work for sequence generation, because its backward RNN needs tokens that lie in the future:

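| Training | Testing |
|---|---|
| Past and future tokens are both available | Only past tokens are available; future tokens have yet to be generated |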

However, we can still use a Bi-RNN to encode a sequence, since the whole input is available at once.

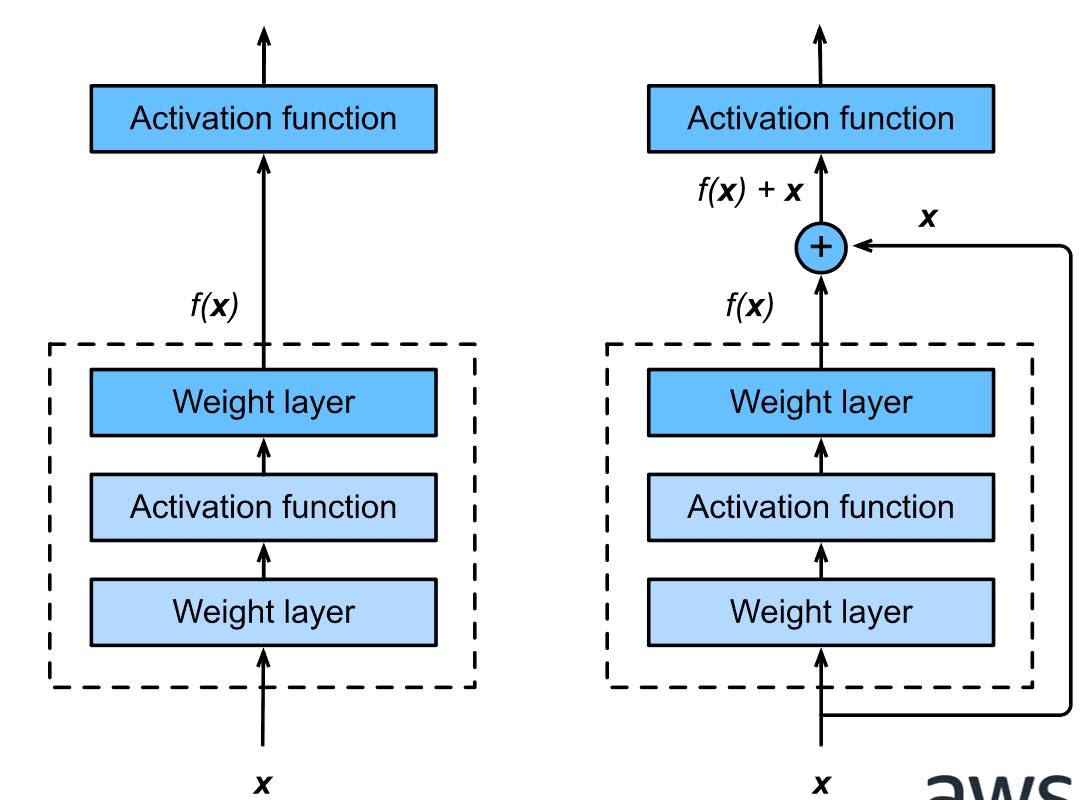

Residual RNN

The input of every second layer is also added to its output (a residual connection).

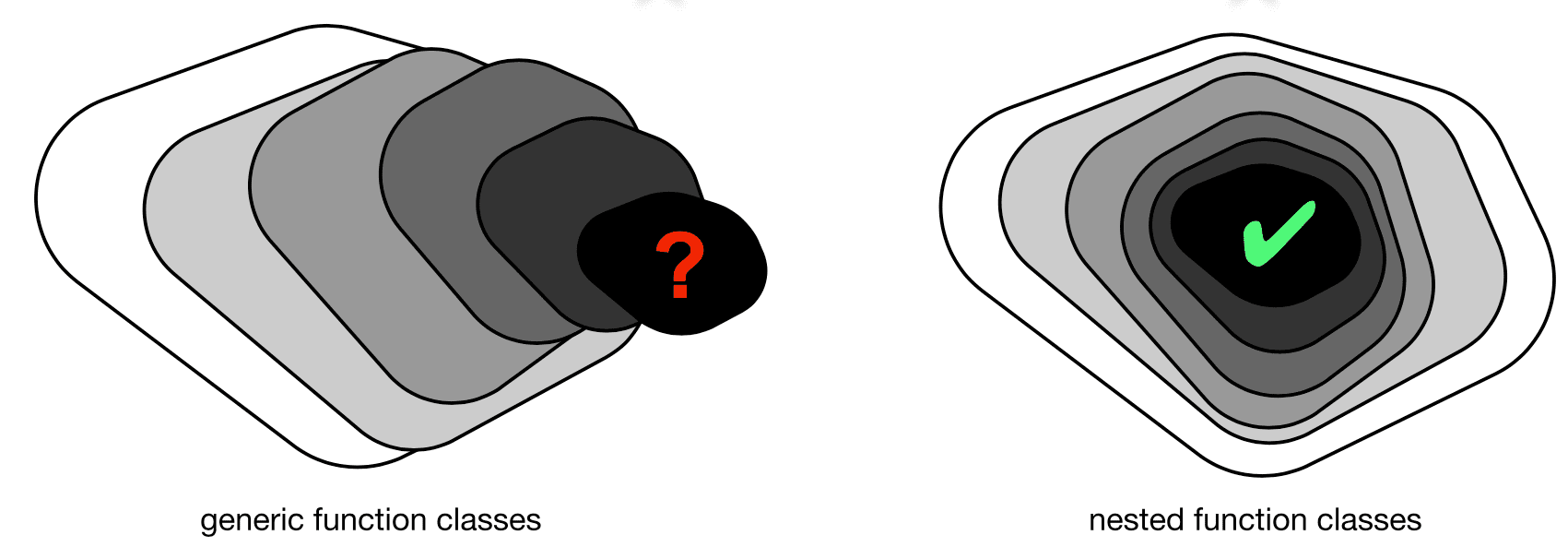
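A NumPy sketch of a stacked tanh RNN with a residual connection on every second layer. Two design choices here are assumptions rather than something the notes specify: the recurrence of each layer uses the cell's own state (before the residual is added), and residual layers must have matching input and hidden sizes. `params` and `residual_deep_rnn` are hypothetical names.

```python
import numpy as np

def params(rng, n_in, n_h, scale=0.1):
    # Plain tanh cell weights (W_xh, W_hh, b)
    return (rng.normal(0, scale, (n_in, n_h)),
            rng.normal(0, scale, (n_h, n_h)),
            np.zeros(n_h))

def residual_deep_rnn(xs, layers):
    hs = [np.zeros(b.shape[0]) for (_, _, b) in layers]  # recurrent state per layer
    outputs = []
    for x in xs:
        inp = x
        for l, (W_xh, W_hh, b) in enumerate(layers):
            hs[l] = np.tanh(inp @ W_xh + hs[l] @ W_hh + b)
            # Every second layer (l = 1, 3, ...) adds its input to its output
            inp = hs[l] + inp if l % 2 == 1 else hs[l]
        outputs.append(inp)
    return np.stack(outputs)

rng = np.random.default_rng(0)
layers = [params(rng, 3, 4), params(rng, 4, 4), params(rng, 4, 4), params(rng, 4, 4)]
out = residual_deep_rnn(rng.normal(size=(6, 3)), layers)
print(out.shape)  # (6, 4)
```

A useful property to check: if a residual layer has all-zero weights, it outputs exactly its input, so adding it cannot hurt the model.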

Adding Layers

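Adding a layer to a model changes its function class. If the new class does not contain the old one, the deeper model is not guaranteed to be at least as good as the shallower one.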

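We want each new layer to only add to the function class (so the old class is nested inside the new one), using a Taylor-expansion-style parametrization: the layer computes the identity plus a learned correction term.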
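Written out, the analogy (our reading of the parametrization) is that a Taylor expansion is a leading term plus corrections, while a residual layer is the identity plus a learned correction \(g\):

\[
f(x) = f(0) + f'(0)\,x + \tfrac{1}{2} f''(0)\,x^2 + \dots
\qquad \longleftrightarrow \qquad
f(x) = x + g(x)
\]

Setting \(g \equiv 0\) gives \(f(x) = x\), so the deeper model can still represent every function the shallower model could.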

Variants

- Simple addition

- Nonlinearity before addition

- Concatenation instead of addition

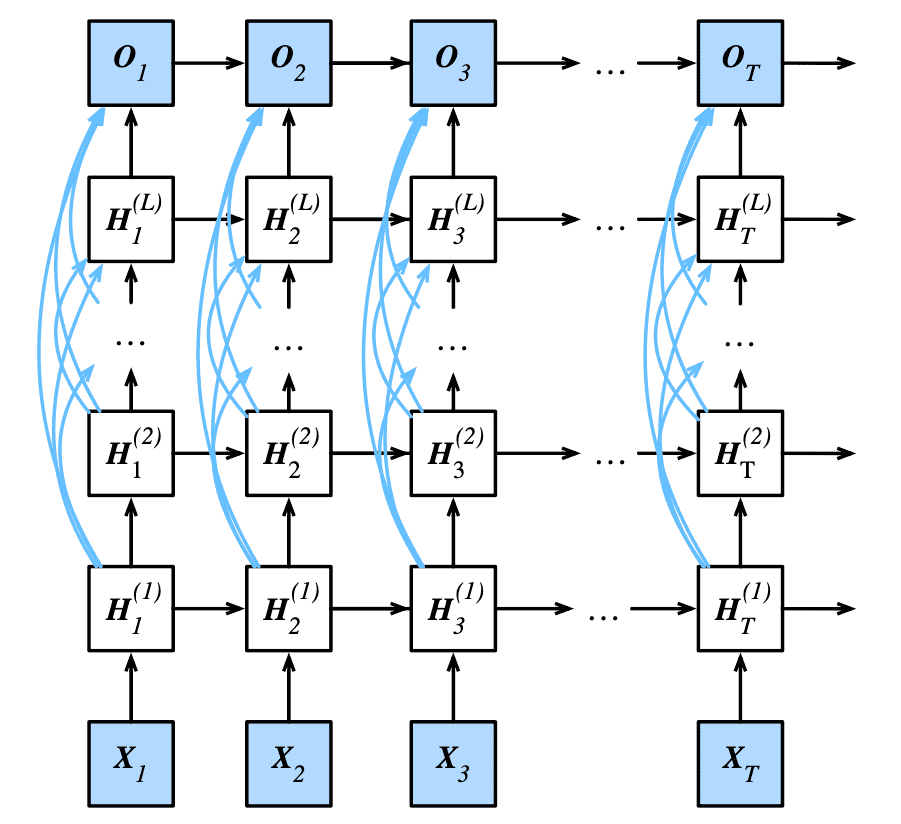
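The three variants differ only in how a layer's input \(x\) is merged with its output \(f(x)\). The helper below is hypothetical, and reading "nonlinearity before addition" as \(x + \mathrm{ReLU}(f(x))\) is an assumption (ReLU is just one choice of nonlinearity).

```python
import numpy as np

def combine(x, fx, mode):
    """Merge a layer's input x with its output fx (hypothetical helper)."""
    if mode == "add":        # simple addition: x + f(x)
        return x + fx
    if mode == "relu_add":   # nonlinearity on the branch, then add: x + relu(f(x))
        return x + np.maximum(0.0, fx)
    if mode == "concat":     # concatenation [x, f(x)]: the width grows
        return np.concatenate([x, fx], axis=-1)
    raise ValueError(f"unknown mode: {mode}")

x = np.array([1.0, -2.0])
fx = np.array([-1.0, 3.0])
for mode in ("add", "relu_add", "concat"):
    print(mode, combine(x, fx, mode))
```

Addition keeps the width fixed, whereas concatenation grows it with every layer; that growth is what the transition layers of a DenseNet-style model have to manage.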

DenseNet RNN

Each layer takes the concatenation of the outputs of all previous layers as its input. Since this width grows with every layer, occasional transition layers reduce the dimensionality.
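A NumPy sketch of one dense block followed by a transition layer. Several details are assumptions for illustration: tanh cells, a fixed number of new features per layer (`growth`), a transition layer implemented as a per-time-step linear projection, and weights drawn inside the functions for brevity (a real model would keep them as trained parameters). All function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def run_rnn(xs, n_h):
    # Plain tanh RNN with freshly drawn weights (for brevity only)
    W_xh = rng.normal(0, 0.1, (xs.shape[1], n_h))
    W_hh = rng.normal(0, 0.1, (n_h, n_h))
    h = np.zeros(n_h)
    hs = []
    for x in xs:
        h = np.tanh(x @ W_xh + h @ W_hh)
        hs.append(h)
    return np.stack(hs)

def dense_block(xs, n_layers, growth):
    """Each layer reads the concatenation of xs and all earlier layers' outputs."""
    feats = xs
    for _ in range(n_layers):
        h = run_rnn(feats, growth)
        feats = np.concatenate([feats, h], axis=1)  # width grows by `growth` per layer
    return feats

def transition(feats, n_out):
    """Transition layer: per-time-step linear projection down to n_out features."""
    W = rng.normal(0, 0.1, (feats.shape[1], n_out))
    return feats @ W

xs = rng.normal(size=(7, 3))
f = dense_block(xs, n_layers=3, growth=4)
print(f.shape)  # (7, 15)
g = transition(f, 6)
print(g.shape)  # (7, 6)
```

The width goes 3 → 7 → 11 → 15 across the block, and the transition layer brings it back down before the next block.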